July 31, 2019 report

Another step toward creating brain-reading technology

A team of researchers at the University of California's Department of Neurological Surgery and the Center for Integrative Neuroscience in San Francisco has taken another step toward the development of a device able to read a person's mind. In their paper published in the journal Nature Communications, the Facebook-funded group describes their work with epilepsy patients and the technology they developed that allowed them to read some human thoughts.

Technology that peers inside the human mind to monitor thoughts has long been a staple of science fiction. In recent years, though, scientists have made important strides toward creating such devices. For example, brain-computer interfaces already exist that allow a person to spell out words using a virtual keyboard, but the process is slow. In this new effort, the researchers report that they were able to read some complete words from the minds of epilepsy patients.

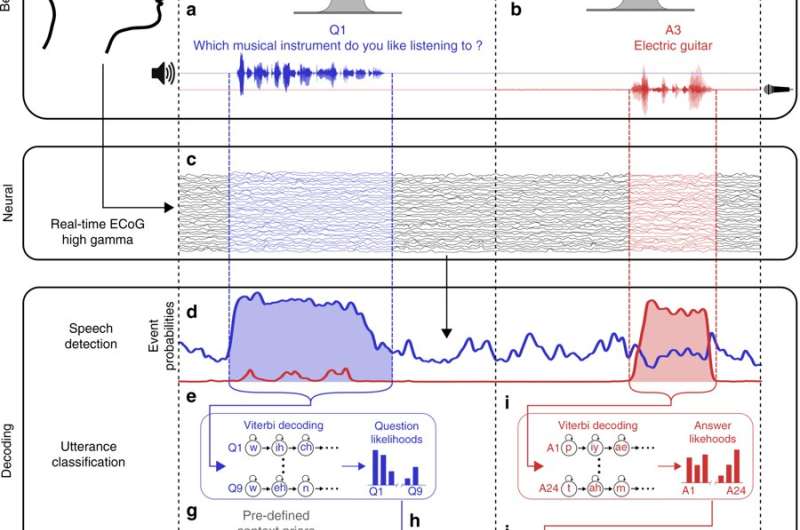

The researchers worked with volunteer epilepsy patients who were already scheduled to have probes implanted in their brains. The volunteers listened to a sequence of questions and responded with canned answers. The researchers then used the data from the brain probes to teach a detection and decoding system to recognize words based on brainwave patterns. But instead of treating hearing and responding as separate tasks, the researchers decoded them together. This allowed their system to use context to determine which word was being spoken. As an example, if a volunteer was answering "yes," it would help to know that they were responding to a yes/no question and that there were only two possible answers.
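The idea of using the question as context can be illustrated with a small probabilistic sketch. This is not the researchers' actual model; it is a minimal example that assumes the decoder produces a probability for each candidate question and a likelihood for each candidate answer, and then reweights the answers by how plausible they are given the decoded question. The function name `decode_answer`, the questions, the answers and all the numbers are hypothetical and chosen only for illustration.

```python
def decode_answer(question_probs, answer_likelihoods, answer_priors):
    """Combine decoded question probabilities with answer likelihoods.

    question_probs: P(question) from the (hypothetical) listening decoder.
    answer_likelihoods: how well each answer matches the brain signal alone.
    answer_priors: P(answer | question), i.e. which answers fit which question.
    Returns a normalized posterior over answers.
    """
    # Context prior over answers: sum over questions of P(q) * P(a | q).
    context = {}
    for question, p_question in question_probs.items():
        for answer, p_answer in answer_priors[question].items():
            context[answer] = context.get(answer, 0.0) + p_question * p_answer

    # Posterior is proportional to signal likelihood times context prior.
    unnormalized = {
        answer: answer_likelihoods.get(answer, 0.0) * prior
        for answer, prior in context.items()
    }
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("no answer has non-zero probability")
    return {answer: value / total for answer, value in unnormalized.items()}


# Hypothetical decoder outputs: the system is fairly sure a yes/no
# question was heard, but the answer signal on its own is ambiguous.
question_probs = {"Do you feel okay?": 0.9, "How is the room?": 0.1}
answer_priors = {
    "Do you feel okay?": {"yes": 0.5, "no": 0.5},
    "How is the room?": {"bright": 0.5, "cold": 0.5},
}
answer_likelihoods = {"yes": 0.30, "no": 0.10, "bright": 0.35, "cold": 0.25}

posterior = decode_answer(question_probs, answer_likelihoods, answer_priors)
best_without_context = max(answer_likelihoods, key=answer_likelihoods.get)
best_with_context = max(posterior, key=posterior.get)
print(best_without_context, best_with_context)
```

In this toy setup the answer signal alone slightly favors "bright," but once the likely question is taken into account, "yes" becomes the most probable answer, which is the kind of benefit the context is meant to provide.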

The researchers report that their system was able to discern the difference between a brain that was listening and one engaged in forming words in response. They further report that their system decoded brain activity with accuracy as high as 76 percent for heard questions and 61 percent for produced answers. They acknowledge that their setup was highly simplified, but suggest it provides a proof of concept for larger studies. They also note that more work is required before a non-invasive probe could produce similar results.

More information: David A. Moses et al. Real-time decoding of question-and-answer speech dialogue using human cortical activity, Nature Communications (2019). DOI: 10.1038/s41467-019-10994-4

© 2019 Science X Network